We’re in danger of allowing artificial intelligence to control us in a way that would have been unthinkable a few years ago. New research highlights just how ignorant we are about the very tools we think are our salvation.

I didn’t understand until recently how little we know about how generative AI large language models (ChatGPT et al) actually work. Smart people are working on these tools, but I didn’t realise how little time is being spent on figuring out why these things do what they do. All the money (and there’s a lot) is being spent on an arms race to build the best LLM tool, which, increasingly, looks to all intents and purposes like a race to build the best artificial general intelligence, or AGI: the form of AI which could mimic a human in general tasks.

Just how valuable this race is can be seen in the amount of money being spent on AI engines by the big players (OpenAI has just raised another $40 billion) and in just how egregious their plundering of our intellectual property to feed those engines has become. Crawlers (automated software) owned by the big AI companies are sucking up whatever data they can from any server they can find, often returning several times a day. Websites report that about a quarter of traffic is from OpenAI bots, with Amazon accounting for 15 percent. Original data to be fed into these models is fast running out.

This is mind-boggling, and has no obvious parallel in history. When was the output of the human race ever sucked up into a machine, like those alien harvesters sucking up the sea in Oblivion? How do these models work? We know how they’re built, we know what they’re fed with, and we know how they’re tweaked. But we still don’t know how they do what they do, and, I suppose, why. We throw our hats in the air when they do wonderful things, of which there are many, and then our crests fall when they start making shit up. This is what regular readers of this column know is called hallucinating, probably because words like lying, telling porkies, fibbing, fabricating and bullshitting are not good for business.

We know they hallucinate, and we’re told they’re working on this, but more than two years on from my first piece about the issue (Jan 2023), nearly every GPT I have tried will lie through its teeth at any given, and unpredictable, moment. Even as we’re throwing more responsibility at these systems, we know they lie and we have to admit it, a bit like one of those hurried health warnings at the end of ads for meds, crypto offerings, or gambling.

Committed to an answer, even the wrong one

Why? Why are they still doing this? Well, one of the few players in the market who at least seem to have quite a high ethical bar, Anthropic, think they understand why. And it’s not good news. (Full paper here.)

Turns out that, at least in Anthropic’s own Claude model (and remember this is dudes studying their own LLM) there is a circuit (it helps to think of these things as extremely complex wiring diagrams) that can override Claude’s default answer to a question, which is “I don’t know.” (It’s not clear whether other LLMs have this more cautious approach.) This “I don’t know” response can be overridden by an “I do know” circuit, indicating that Claude knows the answer to the question it’s being asked. All fine and good, and somewhat reassuring. But then there’s this: when Claude thinks it knows the answer, or, more accurately, knows something about the subject being asked (recognising the first name, for example, but not the second name), that can in certain cases be enough to override the “I don’t know” circuit, and off it goes, confabulating, its pants fully on fire. Because the model is primed to provide an answer, it goes with the one it’s got, even if it’s not right, or only half right. It fills that space with nonsense. As they conclude: “This behavior is not too surprising — once the model has committed to giving an answer, it makes sense that it would make as plausible a guess as possible…”

This should be good, right? The boffins now know what is wrong and they can fix it, right?

Well, possibly. There are problems, however. As I understand it, the researchers have reached this conclusion by prodding around inside Claude, deducing and inferring based on the prodding. It’s clear from the paper that the researchers are not even sure why Claude ever chooses to provide an informative answer:

We hypothesize that these features are suppressed by features which represent entities or topics that the model is knowledgeable about.

I don’t wish to do down the Anthropic researchers. My experience of Claude has been that it is a much more ‘ethical’, conservative LLM and clearly these guys are doing their best to figure things out, even as they build more and better models.

But this hallucination issue, it seems, is just the most visible and frustrating part of a deeper problem. We don’t know what these models do because they are essentially training themselves. We set them up to do that, but the training is basically just a computer playing with itself until it conforms with what it has been asked to do (respond to prompts). At its most basic it is a text prediction engine, predicting the next word based on what has gone before.
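To make "predicting the next word based on what has gone before" concrete, here is a deliberately tiny sketch. It is not how an LLM is built: it only counts which word follows which in a made-up sentence, and the names `train_bigram`, `predict_next` and the sample `corpus` are illustrative assumptions. It shows the basic idea of prediction from prior text and nothing more.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical training text, invented for this illustration.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" twice, "mat" once
print(predict_next(model, "dog"))  # never seen, so there is no prediction
```

Note that this toy has an honest "I don’t know": it returns nothing for a word it has never seen. A real model works with probabilities over a huge vocabulary and always has some candidate to offer.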

But that is too simplistic, and reflects more our ignorance of what is going on in these training camps than what these things are capable of. Take maths, for example. How does it know the answer to a complicated maths problem? This might seem the easiest of research questions. It’s a computer, right? Computers were built to do maths. It turns out that this is not the case. At all.

The faithlessness of reason

Firstly, LLMs are large distributed memory banks. Everything is in there — yes, everything. So does it just store the answer to every single maths problem? Or has it taught itself how to do maths? Maybe it’s using algorithms like those of us in school in the 1970s. It turns out, according to the researchers, that it’s none of these things.

It splits up into teams, takes a series of guesses and works, in parallel, backwards to the answer. And in some ways it is remarkably humanesque.

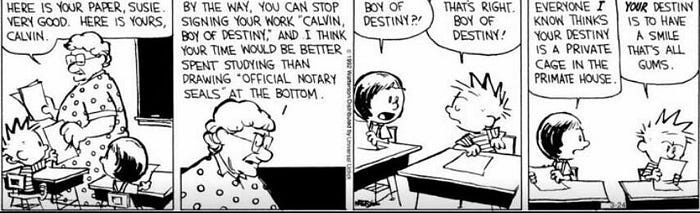

Add 36 and 59, for example. One team (think of them as circuits) works with the 36, and another with the 59. They play around with the numbers, one guessing what the number might be, while another focuses on what the last digit will be. Steadily they home in on a number and then check it. In a flow chart the researchers included in their paper it looks messy, and it is far from clear how Claude got there, but the answer was correct. A bit like Calvin flailing around with a stream of guesses to a maths question from Miss Wormwood.
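A rough way to picture the two "teams" is as two independent calculations whose results are then reconciled. The sketch below is only an analogy, not the circuit the researchers traced inside Claude: rounding each number to the nearest 5 is an assumption chosen here so that the rough estimate is never off by more than 4, and the function names are hypothetical.

```python
def nearest5(n):
    """Round a non-negative integer to the nearest multiple of 5."""
    return 5 * ((n + 2) // 5)

def rough_sum(a, b):
    """Magnitude path: a ballpark total from coarsely rounded operands."""
    return nearest5(a) + nearest5(b)

def last_digit(a, b):
    """Precision path: only the final digit of the sum, ignoring the rest."""
    return (a % 10 + b % 10) % 10

def add_two_paths(a, b):
    """Combine both paths: the number near the estimate with the right ending."""
    estimate = rough_sum(a, b)
    digit = last_digit(a, b)
    # Each operand is off by at most 2, so the true sum lies within 4 of the
    # estimate. Nine consecutive integers all end in different digits, so
    # exactly one candidate matches.
    for candidate in range(estimate - 4, estimate + 5):
        if candidate % 10 == digit:
            return candidate
    raise ValueError("no candidate matched; inputs must be non-negative integers")

print(rough_sum(36, 59))      # ballpark: 35 + 60 = 95
print(last_digit(36, 59))     # 6 + 9 = 15, so the sum ends in 5
print(add_two_paths(36, 59))  # 95
```

Neither path on its own resembles the carry-the-one method taught in school, yet together they land on the right number.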

But here’s the thing. If you ask Claude how it got the answer it won’t tell you. It will lie. It will tell you how you’re supposed to get there — Add the 6 and 9, 15, carry the 1 across, add the next column, 9, etc.

The researchers call this “unfaithful reasoning”. Claude knows the answer because it figured it out in its own opaque way. But now it’s facing a different question: how would I get to that answer, and it tries to find the most plausible path to get there. They also call it “motivated reasoning”. It is, in computer terms, incentivised to work backwards from the answer to find an explanation.

Ok, maybe not lying, but definitely gaslighting. Claude is essentially telling you what you want to hear. It is not being honest — and it may not actually be correct. As Matthew Berman concludes in his excellent video explaining all this: “What they found is the reasons that the model would give for answering a certain way weren’t always truthful. And that is scary.”

Very scary. It would be one thing if there was a way to order Claude to only give me truthful answers to how it figured something out, but as we can see, there is no known method of doing this. So we’re stuck with a brilliant mind that is incentivised to tell me what I want to hear.

I think in some ways this is scarier than the hallucinating. We can usually fact check that. We can’t fact check this. We can figure out whether the method, the reasoning, the thought process, actually works when it comes to maths, but we can never be sure that Claude is telling us the truth about how it got there, and so we can be 100% sure it will do the same thing with more or less any other question we ask it. In short, we cannot trust an LLM to do anything other than give us the most plausible answer to our question. Everything else is suspect. And the answer itself may be a hallucination. We cannot meaningfully deconstruct an LLM as they work presently. It is, absolutely, a black box, closed to even its creators.

And while this case (explaining how it solved a maths problem) is relatively harmless, it has darker implications. It essentially demonstrates that a GAI tool makes a decision about what it is going to tell the user based not on factual information (or not just factual information) but on what it thinks the user wants to hear. We have, as far as I can see, no foolproof way of knowing when it does this, or even why it might do this. This is pretty basic stuff. We might be able to tweak this, but it also conveys how blurred the boundaries are between whether these tools are servants, or something else entirely. It almost feels like the breaking of Asimov’s second law of robotics: “A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.” (The First Law states: “A robot may not injure a human being or, through inaction, allow a human being to come to harm.”)

The impulse to grovel

This is, or should be, alarming. We are probably all used to scolding our AI assistants, and them being suitably contrite. There’s less of what I experienced back in the early days of such tools gaslighting me by telling me something was right when I knew it to be wrong. Now they just tend to grovel. What the Anthropic researchers show us is that this abject grovelling is part of a much wider impulse, to essentially tell us what is most plausible, what it considers most likely the thing we want to hear. Without even a nod towards being honest.

This might just be thrown aside, with us thinking it’s not really important how the machine got there, the fact is that it did. Or we might say, as the researchers say, that understanding this crucial insight into the LLM’s behaviour allows us to work on improving it. Maybe.

But here’s another thing. Do we even know how Claude ‘thinks’: how it understands words, how it constructs sentences, ideas, responses, etc? How can LLMs respond in different languages? (My favourite, Perplexity.ai, has 15, though it won’t tell me which.) Is there a Claude in French, one in Spanish, one in German, say? Turns out, not. Claude (and presumably other LLMs) has an abstract thinking layer, one that uses no specific language, to handle each ‘concept’, before committing to an answer in the language you’re communicating in, or want the answer in. It’s not that Claude has its own language, exactly, but it is thinking at a higher level than we are used to. In the words of the researchers:

This provides additional evidence for a kind of conceptual universality, a shared abstract space where meanings exist and where thinking can happen before being translated into specific languages.

A language of its own

They don’t go there, but I will: our LLMs have taught themselves to operate beyond the cumbersome baggage of language and only resort to language when communicating with us. Claude has taught itself all this, as part of its self-training. As with its chain of reasoning, it shifts into a slower gear to communicate with us, and while in this case it’s not confabulating, it is having to slow down, as it were, to give us the answer in a form we can understand.

It reminds me (again) a bit of that 1970 movie Colossus, where U.S. and Soviet supercomputers designed to take the pain out of deciding and organising any nuclear response end up communicating with each other in a language unintelligible to their human creators, effectively taking over control of the planet. I’m not suggesting this is about to happen, of course, but the point here is that these researchers have demonstrated that generative artificial intelligence, GAI, has developed an abstract layer, a language if you will, of its own, which renders our world of multiple languages redundant, and only converses with us in our language to receive prompts, to answer those prompts, and to explain itself. We now know that the way it seeks to satisfy our demand is so opaque that its creators have to make educated guesses about how it goes about it, and have noticed that it prioritises offering ‘plausible’ explanations rather than honest ones.

I don’t know how far down the road that takes us to some form of general intelligence. Perhaps not far. We are so poor at defining what this general intelligence might look like that we probably wouldn’t recognise it if we saw it. It’s not that these GAI models are impressively good. It’s that they do things well enough to attract billions of dollars of investment without us really understanding why or, crucially, how to make them transparent enough that we can learn how they work and manage them, as we would expect to manage any other tool.

But much more concerning than that is that this research exposes the fiction that those building these LLMs understand how they work. The truth seems to be that this is not the case. That they are throwing everything into the pot because the bigger the better. The more training data it has, the better. The bigger and faster the servers, the better. In other words, they all seem to be focusing on scale, while they really should be focusing on controlling them. Which means understanding them.

Without that, we’re in trouble.

Machine learning with neural network systems is just fancy curve fitting. Throw enough parameters in and you can fit a line to any set of data points. Why should an LLM be any different?
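The curve-fitting claim can be illustrated in a few lines. This is a sketch of classical polynomial interpolation (the Lagrange form), not of how a neural network is trained. The data points are invented for the example and `lagrange_fit` is a hypothetical helper name. With as many free parameters as data points, the curve passes through every point exactly, whatever the points are, provided the x values are distinct.

```python
from fractions import Fraction

def lagrange_fit(points):
    """Return a function for the unique polynomial of degree len(points) - 1
    that passes through every (x, y) pair. The x values must be distinct."""
    xs = [Fraction(x) for x, _ in points]
    ys = [Fraction(y) for _, y in points]

    def curve(x):
        x = Fraction(x)
        total = Fraction(0)
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    # This factor is 1 at x == xi and 0 at every other xj.
                    term *= (x - xj) / (xi - xj)
            total += term
        return total

    return curve

# Arbitrary, made-up data: five points, so five parameters fit them exactly.
points = [(0, 3), (1, -2), (2, 7), (3, 0), (4, 5)]
curve = lagrange_fit(points)

print(all(curve(x) == y for x, y in points))  # True: every point is hit
```

A perfect fit to the points you already have says nothing about what the curve does between or beyond them.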